MU Peeves Thread

-

AI is so boring to RP with.

-

I use ChatGPT exclusively to write my TS poses.

-

New anxiety unlocked: someone using ChatGPT is getting more RP than you.

-

@Pavel Honestly I’m just impressed. You have to really work at it to get ChatGPT to come up with anything smutty, after aggressively reassuring it that all parties are consenting to the joyous experience.

-

@Tez said in MU Peeves Thread:

@Pavel Honestly I’m just impressed. You have to really work at it to get ChatGPT to come up with anything smutty, after aggressively reassuring it that all parties are consenting to the joyous experience.

Hey, I never said it was good TS. Lots of mentions of panning to <insert weird adjective choice> fireplaces.

-

I have given serious thought recently to having ChatGPT or similar fill out chargen apps for me. I just can’t bring myself to do it. I’ll RP until I pass out but write a background? Going on month 4 and no progress.

-

@Babs I’ve definitely used these virtual intelligence tools to come up with concepts or seeds for me to expand on. Not a whole backstory or character, but bits and pieces I can write something around; otherwise I fall into the trap of making the same character for the tenth time.

-

I would rather read the worst background in the world than a ChatGPT app and I can’t imagine I’m alone. It fills me with despair.

-

A ChatGPT app can’t even come close to the appreciation I have for the person who apped into a modern-day horror game with a character whose dark backstory is that he killed the dinosaurs.

-

@Third-Eye said in MU Peeves Thread:

I do make use of AI detection tools, both free versions and a paid one I subscribed to after Too Many Of These Incidents For Me, but mostly they’re confirmation for me when something feels REALLY off. There’s tons of AI-generated stuff that’s actually been edited, or was just a touch-up on something a human wrote, that I don’t and would never notice. Mainly, I associate LLM writing with being over-long and flat yet also weirdly effusive, but it’s usually not ‘bad’ writing, as such. It’s weird because it’s not ‘written’ at all; it’s generated word after word. The ‘simple’ yet repetitive language that comes out of that generation process is probably the rhythm that tips me off the most. This article mentions it and some other tells.

https://readwrite.com/how-to-tell-if-something-is-written-by-chatgpt/

IDK, I think there’s also a ‘scales falling from your eyes’ quality once you know this stuff is becoming widespread (presuming it bothers you, I guess). Once you actually start looking for it, you see it when it’s obvious, and a lot of the time people don’t bother to hide it.

AI detection tools are only really useful when you’re operating in a culture where use of generative AI is considered inappropriate in the first place. That said, there’s an appropriate and an inappropriate use of LLMs in creative writing, and, at least in my view, it has very little to do with how obvious it is. The most inappropriate use is relying on it to do all of the creative parts for you, without forming any idea of what it should write before it does. The result is usually worse than merely obvious; it’s unreadable. What it produces is trash, and it isn’t worth the time it takes to read.

I can think of two more appropriate uses. The first is invoking its hallucinatory impulse to brainstorm with you, then writing the actual prose yourself for one of the ideas it gives you (or something derived from one). That use case doesn’t require much elaboration, so I’ll move on to the second: expanding on what you already have. Here it can function almost like a personal assistant.

An example of the latter: I write a monthly TTRPG-and-variety magazine, and we use a system that’s entirely homebrewed. It’s inspired by WoD, Diablo 2, Magic: the Gathering, and several other things, not so much in terms of setting as in terms of game design. Whenever we release a new setting, we write a variety of new “Proficiencies,” which are analogous to Skills in CofD/WoD terms. These Proficiencies are specific to the setting. We want them written in a certain format that stays consistent between settings, while the style of writing changes from one setting to the next.

We hand-write short versions of these Proficiencies and instruct the LLM to write a long-form version of each one in that format. We also instruct it not to use the same phrasing over and over, and to use the previously generated Proficiencies in its context window as a guide to which phrasing and sentence structures to avoid repeating. This works beautifully for us. Most of the Proficiencies come out pretty damn good.
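
A minimal sketch of that expansion loop, assuming a Python script; the post doesn’t say what tooling the magazine actually uses. `FORMAT_SPEC`, `build_expansion_prompt`, and `expand_all` are hypothetical names, and `generate` stands in for whatever model call you have, so no particular LLM API is assumed. What it illustrates is that each new prompt carries the earlier long-form entries, so the model can see which phrasing to avoid.

```python
from typing import Callable, List

# Hypothetical house format; the post doesn't spell out the magazine's real one.
FORMAT_SPEC = "Name, a one-line summary, then two paragraphs on how it is used in play."


def build_expansion_prompt(short_version: str, previous: List[str],
                           format_spec: str = FORMAT_SPEC) -> str:
    """Build one prompt asking the model to expand a hand-written short Proficiency."""
    parts = [
        "Expand the short Proficiency below into a long-form entry.",
        f"Use this format: {format_spec}",
    ]
    if previous:
        # Earlier outputs go back into the context window so the model can
        # see which phrasing and sentence structures it should not repeat.
        parts.append("Entries already written (do not reuse their phrasing "
                     "or sentence structure):")
        parts.extend(f"---\n{entry}" for entry in previous)
    parts.append(f"Short version to expand:\n{short_version}")
    return "\n\n".join(parts)


def expand_all(short_versions: List[str],
               generate: Callable[[str], str]) -> List[str]:
    """Expand each Proficiency in order; `generate` wraps whatever LLM call you use."""
    long_versions: List[str] = []
    for short in short_versions:
        prompt = build_expansion_prompt(short, long_versions)
        long_versions.append(generate(prompt))
    return long_versions
```

Because each finished entry is appended before the next prompt is built, later entries see everything written so far; with a long list you would eventually have to trim older entries to fit the model’s context window.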

All of this leads to two other factors in the appropriate use of LLMs for creative writing. They are:

Actually paying attention. Generative AI can produce some great stuff and make you far more productive as an author or an illustrator. However, it can also produce a lot of trash. That means you have to proofread the LLM output and examine the Stable Diffusion dump. It also means you’ll have to fix whatever parts of it are flawed. Don’t sit there fiddling with the prompt for 30 minutes when you can fix the output by hand in 30 seconds. Edit the sixth finger out with your tablet.

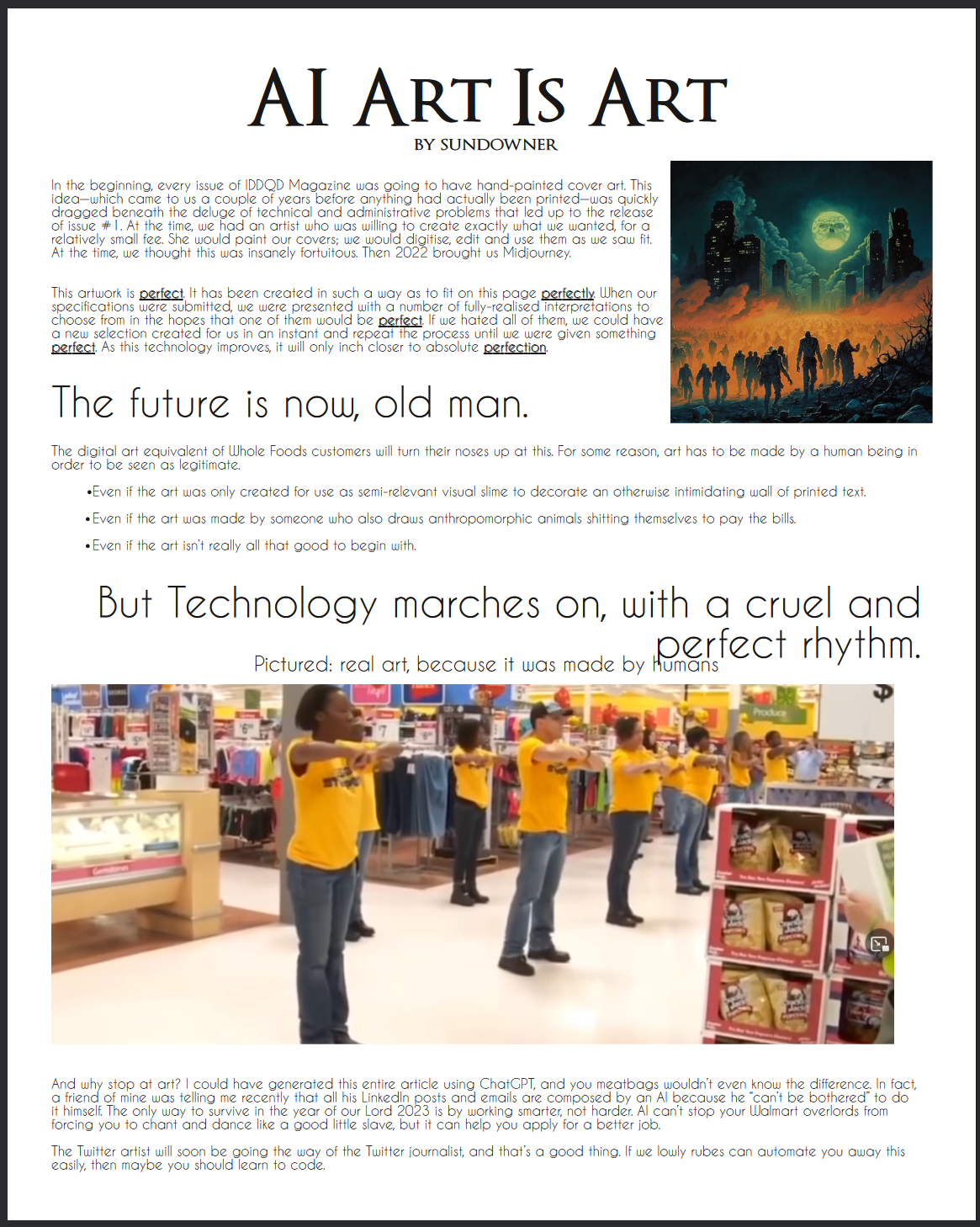

The other factor is honesty. Don’t pretend you aren’t using LLMs or generated illustrations. If you are open about what you’re doing, it’s harder for people to fault you for it. Don’t want to read content that might have been generated by an AI? Don’t buy this product. Not all of what we publish is made by an AI, and basically none of it is made exclusively by an AI, but we’re not going to go through the trouble of labeling which parts are which or to what extent a certain part relied on AI, because the philosophy we’re working with is that it’s the results, not the means, that matter. What we aim to put to print are things worth reading; things worth looking at. And that’s irrespective of how it was made. We explicitly published this point in our April 2023 issue here, with this spread (left and right page):

We also run articles about prompt-writing and scripting LLMs to do things like chargen for you given a ruleset and a concept, where it is guided, step by step, through each part of the process and relies on its context window to consider the choices it already made while making future choices that are consistent with the concept and with the already-chosen options. This technique has proven quite effective. Another one we wrote is on fine-tuning Stable Diffusion based on a particular artist’s body of work. We may run one on fine-tuning local LLMs, but they aren’t very good yet, so we’re holding off on that.
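
A minimal sketch of the guided chargen technique, assuming Python and a caller-supplied `generate` function that wraps whatever LLM you use. `STEPS`, `step_prompt`, and `guided_chargen` are hypothetical, and the three steps are placeholders rather than the homebrew system’s real chargen order. Each step’s prompt repeats the ruleset and concept and lists the choices already made, which is what lets later choices stay consistent with earlier ones.

```python
from typing import Callable, Dict, Sequence, Tuple

# Hypothetical chargen steps; the actual ruleset isn't described in the post.
STEPS: Tuple[Tuple[str, str], ...] = (
    ("attributes", "Distribute attribute points according to the ruleset."),
    ("skills", "Choose skills that fit the concept and the attributes chosen."),
    ("merits", "Pick merits consistent with everything chosen so far."),
)


def step_prompt(ruleset: str, concept: str, step: str, instruction: str,
                choices: Dict[str, str]) -> str:
    """Prompt for a single chargen step, carrying earlier choices forward."""
    lines = [f"Ruleset:\n{ruleset}", f"Character concept: {concept}"]
    if choices:
        # Earlier decisions stay in the context window for consistency.
        lines.append("Choices made so far (stay consistent with these):")
        lines.extend(f"- {name}: {value}" for name, value in choices.items())
    lines.append(f"Current step ({step}): {instruction}")
    return "\n".join(lines)


def guided_chargen(ruleset: str, concept: str,
                   generate: Callable[[str], str],
                   steps: Sequence[Tuple[str, str]] = STEPS) -> Dict[str, str]:
    """Walk the model through each step in order, one prompt per step."""
    choices: Dict[str, str] = {}
    for step, instruction in steps:
        prompt = step_prompt(ruleset, concept, step, instruction, choices)
        choices[step] = generate(prompt)
    return choices
```

Splitting chargen into one prompt per step keeps each request small and makes it easy to inspect or hand-edit a single choice before the next step sees it.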

The above issue in particular paid my mortgage that month. Since then, our editing has gotten way better. We (and it is a we, since I’m not the only person here) are of the view that AI-generated content is the future, but also that it will never replace human editorial vision. It’s here to stay, and so are we humans.

-

I’m interested in that magazine-slash-newsletter.

-

@Narrator

Of course using AI tools is expedient. At this point, everyone involved in the conversation recognizes two things: AI tools are expedient to use and they stand to make the people creating and using them a lot of money. No one is arguing these points. I’m glad you’re being transparent about your business practices, but the complaint in this thread is about people who are not being transparent. To use your Whole Foods analogy, you may not care where your eggs come from and that’s fine. Keep buying your 5 dozen egg trays. However, if someone thinks they’re buying the pasture-raised free-range eggs and when they open the carton, they are, in fact, the same eggs from the factory farm, that just ain’t right.

-

@Trashcan said in MU Peeves Thread:

@Narrator

Of course using AI tools is expedient. At this point, everyone involved in the conversation recognizes two things: AI tools are expedient to use and they stand to make the people creating and using them a lot of money. No one is arguing these points. I’m glad you’re being transparent about your business practices, but the complaint in this thread is about people who are not being transparent. To use your Whole Foods analogy, you may not care where your eggs come from and that’s fine. Keep buying your 5 dozen egg trays. However, if someone thinks they’re buying the pasture-raised free-range eggs and when they open the carton, they are, in fact, the same eggs from the factory farm, that just ain’t right.

Yeah. Well, that’s an issue of false advertising, which is a form of fraud. So there’s definitely basis for outrage there, or at a minimum irritation depending on how much it really matters.

If you want a real critique of generative AI, it’s that the out-of-the-box solutions right now aren’t very good. If your goal is to make something cool and interesting, they’ll produce something formulaic to the point of being droll. Quite a bit of effort goes into wrangling and contorting them to produce that interesting thing, which requires editorial vision.

You can still automate away the drudgery of production, though, if you do go through that trouble. That’s for certain. And it’s also definitely not going away no matter how angry people get about it.

@Tez said in MU Peeves Thread:

@Pavel Honestly I’m just impressed. You have to really work at it to get ChatGPT to come up with anything smutty, after aggressively reassuring it that all parties are consenting to the joyous experience.

This is because the entire GPT line of LLMs is designed to be inoffensive. OpenAI’s content moderation philosophy is basically that the produced text must be safe according to an HR or PR executive. This means no graphic violence. It means no sex. It means no -isms or -phobias. And so on. Its output comes off as sanitized, because it IS sanitized. This is great if you’re a corporation who wants to automate job rejection letters that feel somewhat personalized without running afoul of anti-discrimination law, but it’s awful if you’re a novelist trying to make it take the broad strokes of your plot and fill in the blanks, because everything interesting in the human experience is all-but-guaranteed to piss hall monitor bureaucrats off.

There are LLMs that can be run locally on a consumer-grade GPU, such as the downloadable GGUF models. However, to use them effectively for anything other than HR-safe purposes, you need to fine-tune them on the kind of content you want. If you want smut, you’ll need to fine-tune on bodice rippers. If you want violence, you’ll have to fine-tune on military science fiction or horror. If you want -isms or -phobias, you’ll have to train on /pol/ or your uncle’s bowling alley GC. If you want some mix of all of the above, you’ll need to train on all of the above.

There is a similar issue with commercial image generation models like Midjourney and DALL-E, which refuse to make explicit violence or pornography and, to an extent, don’t like making women with certain body types because they’re too titillating. If you’re hoping to make an avatar of a woman with a Christina Hendricks build, that’s an uphill battle. It’s so frustrating that in our circle we’ve taken to calling Midjourney “Midjanny,” since it acts as the fun police.

-

I am happy to concede that AI can be fun and useful in appropriate contexts. This is not one of those contexts. I do not want to rp with AI.

-

@Narrator said in MU Peeves Thread:

@Third-Eye said in MU Peeves Thread:

I do make use of AI detection tools, both free versions and a paid one I subscribed to after Too Many Of These Incidents For Me, but mostly they’re confirmation for me when something feels REALLY off. There’s tons of AI-generated stuff that’s actually been edited, or was just a touch-up on something a human wrote, that I don’t and would never notice. Mainly, I associate LLM writing with being over-long and flat yet also weirdly effusive, but it’s usually not ‘bad’ writing, as such. It’s weird because it’s not ‘written’ at all; it’s generated word after word. The ‘simple’ yet repetitive language that comes out of that generation process is probably the rhythm that tips me off the most. This article mentions it and some other tells.

https://readwrite.com/how-to-tell-if-something-is-written-by-chatgpt/

IDK, I think there’s also a ‘scales falling from your eyes’ quality once you know this stuff is becoming widespread (presuming it bothers you, I guess). Once you actually start looking for it, you see it when it’s obvious, and a lot of the time people don’t bother to hide it.

AI detection tools are only really useful when you’re operating in a culture where use of generative AI is considered inappropriate in the first place.

Yeah, and I think the point of this specific conversation is that a lot of people want it to be culturally inappropriate in this hobby. There are already games that make it explicitly not allowed to use generative AI in writing, and so sometimes they have to use tools to help confirm suspicions.

The above issue in particular paid my mortgage that month. Since then, our editing has gotten way better. We (and it is a we, since I’m not the only person here) are of the view that AI-generated content is the future, but also that it will never replace human editorial vision. It’s here to stay, and so are we humans.

So AI can replace writers, just not editors???

@Narrator said in MU Peeves Thread:

@Trashcan said in MU Peeves Thread:

@Narrator

Of course using AI tools is expedient. At this point, everyone involved in the conversation recognizes two things: AI tools are expedient to use and they stand to make the people creating and using them a lot of money. No one is arguing these points. I’m glad you’re being transparent about your business practices, but the complaint in this thread is about people who are not being transparent. To use your Whole Foods analogy, you may not care where your eggs come from and that’s fine. Keep buying your 5 dozen egg trays. However, if someone thinks they’re buying the pasture-raised free-range eggs and when they open the carton, they are, in fact, the same eggs from the factory farm, that just ain’t right.

Yeah. Well, that’s an issue of false advertising, which is a form of fraud. So there’s definitely basis for outrage there, or at a minimum irritation depending on how much it really matters.

It was pretty much the whole point of this particular conversation on this particular board: this is MU Peeves, and it’s about people using LLMs on a MU, not LLMs in general.

If you want a real critique of generative AI, it’s that the out-of-the-box solutions right now aren’t very good. If your goal is to make something cool and interesting, they’ll produce something formulaic to the point of being droll. Quite a bit of effort goes into wrangling and contorting them to produce that interesting thing, which requires editorial vision.

You can still automate away the drudgery of production, though, if you do go through that trouble. That’s for certain.

Is “the drudgery of production” here…writing? Writing words in our writing hobby?

And it’s also definitely not going away no matter how angry people get about it.

ChatGPT doesn’t need you veering off in a conversation just to shill for it.

@Tez said in MU Peeves Thread:

@Pavel Honestly I’m just impressed. You have to really work at it to get ChatGPT to come up with anything smutty, after aggressively reassuring it that all parties are consenting to the joyous experience.

This is because the entire GPT line of LLMs is designed to be inoffensive. OpenAI’s content moderation philosophy is basically that the produced text must be safe according to an HR or PR executive. This means no graphic violence. It means no sex. It means no -isms or -phobias. And so on. Its output comes off as sanitized, because it IS sanitized. This is great if you’re a corporation who wants to automate job rejection letters that feel somewhat personalized without running afoul of anti-discrimination law, but it’s awful if you’re a novelist trying to make it take the broad strokes of your plot and fill in the blanks, because everything interesting in the human experience is all-but-guaranteed to piss hall monitor bureaucrats off.

There are LLMs that can be run locally on a consumer-grade GPU, such as the downloadable GGUF models. However, to use them effectively for anything other than HR-safe purposes, you need to fine-tune them on the kind of content you want. If you want smut, you’ll need to fine-tune on bodice rippers. If you want violence, you’ll have to fine-tune on military science fiction or horror. If you want -isms or -phobias, you’ll have to train on /pol/ or your uncle’s bowling alley GC. If you want some mix of all of the above, you’ll need to train on all of the above.

There is a similar issue with commercial image generation models like Midjourney and DALL-E, which refuse to make explicit violence or pornography and, to an extent, don’t like making women with certain body types because they’re too titillating. If you’re hoping to make an avatar of a woman with a Christina Hendricks build, that’s an uphill battle. It’s so frustrating that in our circle we’ve taken to calling Midjourney “Midjanny,” since it acts as the fun police.

Buddy, it was just one person making a joke, it doesn’t need paragraphs of explanation.

-

Please no LLM proselytizing in this MU forum, jfc. Get it out of here.

-

@Tez said in MU Peeves Thread:

@Tez said in MU Peeves Thread:

I am begging game-runners to stop using ChatGPT.

I AM BEGGING PLAYERS TO STOP USING CHATGPT.

I’LL STOP USING IT WHEN GAMES STOP ASKING FOR DESCS

Disclaimer: in case it wasn’t obvious, this is a joke. I use it to get ideas for a desc and then actually write one, as much as I absolutely loathe writing them.

-

@Testament said in MU Peeves Thread:

@Tez said in MU Peeves Thread:

@Tez said in MU Peeves Thread:

I am begging game-runners to stop using ChatGPT.

I AM BEGGING PLAYERS TO STOP USING CHATGPT.

I’LL STOP USING IT WHEN GAMES STOP ASKING FOR DESCS

This is a solved problem if you recycle the same PBs and the same @descs over and over again!