AI Megathread

-

@dvoraen This is one of my favourite reads lately: https://ludic.mataroa.blog/blog/i-will-fucking-piledrive-you-if-you-mention-ai-again/

-

@Hobbie I love that one.

Friend quoted me this recently:

“It’s like ChatGPT has read everything on the internet, and kind of vaguely remembers some of it and is willing to make up the rest.”

There are so many documented instances of LLMs making up nonsense. Citing books that don’t exist. Making up fake lawsuit citations. Misrepresenting articles written by journalists. Making up fake biographical details. The code it spits out is often garbage (or, worse, wrong in subtle ways). And that’s not even touching on all the random stupidity where it tells people to use glue in their pizza or incorporate poison into their recipes.

The whole GenAI industry is most likely just a big bubble built on a con.

-

People call this “hallucination”. I think we should stop letting them assign a new name to an existing phenomenon. The LLM is malfunctioning. It is saying things that are wrong. It is failing to do what it was designed to do.

-

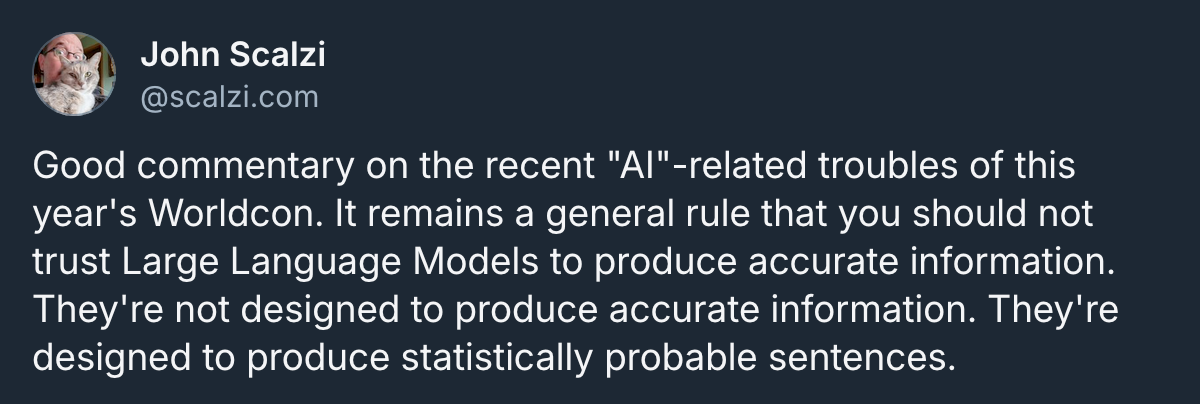

“They’re designed to produce statistically probable sentences.”

Exactly. Sometimes what is statistically probable is also correct: “What is the capital of France?” will most likely correctly tell you “Paris” because Paris has a high statistical association with “capital of France”.

But this methodology is inherently unreliable for giving facts. An LLM might confidently assert that George Washington cut down a cherry tree, just because there’s a common association between Washington and that story, even though historians largely believe it’s a myth. Elon Musk associated with Teslas + Teslas associated with car crashes + Elon Musk associated with a car crash leads to an LLM erroneously asserting that Elon Musk died in a fatal Tesla crash. Sure, it’s a statistically probable sentence, but it’s just not true. The LLM doesn’t know whether something is true, and it doesn’t care.
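To make the "statistically probable" point concrete, here is a toy sketch. It assumes a deliberately tiny, made-up corpus and a simple word-pair counter; real LLMs are neural networks trained on vastly more text, so treat this as an analogy rather than how any actual product works. The names `CORPUS`, `build_counts`, and `most_probable_next` are hypothetical.

```python
from collections import Counter, defaultdict

# Tiny made-up corpus (hypothetical, for illustration only).
CORPUS = [
    "the capital of france is paris",
    "the capital of france is paris",
    "the capital of france is lyon",  # a wrong claim someone posted
    "washington cut down a cherry tree",
    "washington cut down a cherry tree",
    "historians say the cherry tree story is a myth",
]


def build_counts(sentences):
    """Count which word follows each word across the corpus."""
    counts = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.split()
        for current, following in zip(words, words[1:]):
            counts[current][following] += 1
    return counts


def most_probable_next(counts, word):
    """Return the most frequent follower of `word`; truth is never consulted."""
    return counts[word].most_common(1)[0][0]


counts = build_counts(CORPUS)
print(most_probable_next(counts, "is"))      # most common follower of "is"
print(most_probable_next(counts, "cherry"))  # most common follower of "cherry"
```

The predictor picks whatever followed a word most often. It gets "paris" right only because that was the majority claim in the corpus, and it will happily continue the cherry tree story because the myth is repeated more often than the correction.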

-

@Faraday said in AI Megathread:

The LLM doesn’t know whether something is true, and it doesn’t care.

I know this may seem like a quibble, but I feel it’s an important distinction: It can’t do either of those things, because it’s not intelligent. It’s a very fancy word predictor, it can’t think, it can’t know, it can’t create.

-

As more and more time passes and AI becomes more and more widespread, all I can think is, “When do we declare our Butlerian Jihad?” Because I’m kinda over all this stuff already lol.

-

The AI problem is now so bad that people are trying to defeat it with… more AI. I don’t even know. What is life.

What I do know is that if someone fed my poses into ChatGPT or similar, I’d be pretty pissed off about it.

-

Is it sad that I’ve met people whose natural writing and RP are so bad that I incorrectly assumed they were LLMs?

-

spotted in the wild, made me lol at least as much as it made me groan.

-

@Pavel said in AI Megathread:

@Faraday said in AI Megathread:

The LLM doesn’t know whether something is true, and it doesn’t care.

I know this may seem like a quibble, but I feel it’s an important distinction: It can’t do either of those things, because it’s not intelligent. It’s a very fancy word predictor, it can’t think, it can’t know, it can’t create.

(This very obvious rant is not directed to anyone in particular but I still feel like it needed to be said…)

Neither can a computer. It can’t think, it can’t know, it can’t create any more than an AI can. It can’t get nearly as close as AI can. But every person here still uses computers. No one’s given up the machine and accessories filled with toxic metals that have been clawed from the earth as quickly as possible, for the purposes of profit, without regard for the environment being disturbed. Metals that are so valuable they mean the potential end or continuation of wars that kill hundreds of thousands, with body counts that go up every day that possession of these metals isn’t agreed upon or bargained away to stop the death and dying. Metals that will go into these computers we all use that will likely return to the earth to sit in a landfill forever with parts that will never biodegrade in exchange for being of limited, non-thinking, non-knowing, non-creative use for just a few short years.

Everyone here also uses the internet with all the filth and hate and disgusting things that can be found online. Darkwebnuffsaid. The same internet that contributed to the death of newspapers and the decline of journalism as well as people’s recognition of truth and facts. The same internet that allowed Amazon to put countless small businesses out of business across the world. But no one here has stopped using that. Everyone is still contributing online to the companies that track and store massive amounts of information on as many people as possible on servers around the globe that devour energy like a bottomless pit and exude heat like a hell-connected furnace, then weaponize that information for profit and political leverage so intense it can turn elections in the most powerful country in the world, very specifically by weaponizing fear and hate and turning those dials up to 11 on people’s internet feeds to sway votes with no regard for what those people might do with their fear and hate outside of the voting booth.

How is it that AI is the problem?

You can’t be serious.

Please.

One might think you are being just a tad too…

Critical.

But okay. Rant done. Get on your computer and tell me on the internet how AI is so problematic you won’t use it.

I’m totally listening.

-

@MisterBoring said in AI Megathread:

Is it sad that I’ve met people whose natural writing and RP are so bad that I incorrectly assumed they were LLMs?

-

@Jynxbox said in AI Megathread:

But okay. Rant done. Get on your computer and tell me on the internet how AI is so problematic you won’t use it.

I can. I did in previous posts, but I’ll recap briefly: There is a benefit/harm ratio to every invention mankind has ever made.

Harnessing fire can bring warmth, but it can also destroy. Splitting the atom can power a nuclear power plant or wreak untold destruction. The internet contains filth, but it also powers information, education, and human connections. We have to gauge tools based on how they are used. GenAI, by my estimation, brings tremendous harm and next to zero actual good. Every use case I’ve seen - from customer service chatbots to research - is terrible compared to the human counterparts it’s driving out of business.

We also, traditionally, have gauged tools based on their legality. Napster brought free music to millions. Some saw that as a good thing, but it was illegal, and it was stopped. The GenAI industry is committing copyright infringement on a scale that would make Napster blush.

There are many people who boycott Amazon, social media, or whatever based on the harms they perceive. There are environmentalists who would scold me for the plastic soda bottles I use. We’re all allowed to pick our battles. Fighting GenAI is one of mine.

-

@Juniper said in AI Megathread:

People call this “hallucination”. I think we should stop letting them assign a new name to an existing phenomenon. The LLM is malfunctioning.

No, it is not. What the AI spits out is meaningless to the AI. It just spits out the most likely next set of words based on the input it received and what it has already given. For it to malfunction, it needs to start writing sentences devoid of grammar. As long as what it writes is grammatically correct and a somewhat rational statement, it has succeeded.

It is saying things that are wrong.

Yes, but factually accurate answers are not the purpose. Grammatically correct sentences are.

It is failing to do what it was designed to do.

No. It is doing exactly what it is designed to do. It’s failing at doing what entrepreneurs, marketers, and other PT Barnum snake oil salespeople want the public to think it can do.

EDIT: To actually contribute something to the thread, about the only thing I am willing to use AI on for MU purposes is played-bys and maybe creating images on the wiki for the various venues on the grid. I have grown tired of seeing Jason Momoa as the image for Seksylonewulf McRiptabs #247.

EDIT 2: Typoes.

-

@Faraday I boycott Amazon because AWS absolutely sucks.

I’d explain more but I have to go write more Lambda functions while closing every pop-up telling me to use Q to streamline my processes.

-

@Jynxbox said in AI Megathread:

@Pavel said in AI Megathread:

@Faraday said in AI Megathread:

The LLM doesn’t know whether something is true, and it doesn’t care.

I know this may seem like a quibble, but I feel it’s an important distinction: It can’t do either of those things, because it’s not intelligent. It’s a very fancy word predictor, it can’t think, it can’t know, it can’t create.

(This very obvious rant is not directed to anyone in particular but I still feel like it needed to be said…)

Neither can a computer. It can’t think, it can’t know, it can’t create any more than an AI can. It can’t get nearly as close as AI can.

This seems like a strange separation: AI is run on computers. A computer is simply a larger tool that you can run all sorts of smaller tools on.

A computer can know some things, if you program it to do so. If you program a calculator, you instruct it on immutable facts of how numbers work.

If GenAI successfully reports that 1+1=2, all it’s saying is that a lot of people on the internet have mentioned that’s probably the case. It’s searching a massive database of random shit and finding a bunch of instances where someone mentioned the text “1+1” and seeing that a bunch of those instances ended with “=2”. It’s giving you a statistically probable sentence. Due to this, it’s ridiculously, laughably easy to manipulate.

The calculator on your computer knows that 1+1=2 because it knows what 1 is, and it knows what addition is, and it knows how to sum two instances of 1 together. Computers are very good at following strict rules and working within them when they are programmed to do so. And computers are very good at analyzing and iterating, and people have written really effective automation and AI tools (of the non-generative variety) to do that over the years.

But yes: as you said, computers can’t produce raw creation. Which is kind of the point being made.

-

@Roz said in AI Megathread:

If you program a calculator, you instruct it on immutable facts of how numbers work.

I mean… kinda? A calculator app doesn’t really know math facts the way a third grader does. It doesn’t intuitively know that 1+1=2. It just responds to keypresses, turns them into bits, and shuffles the bits around in a prescribed manner to get an answer.

I don’t point that out to be pedantic, but just to further contrast it with the way an LLM handles “what is 1+1”. Like you said, it’s based on statistical associations. It may conclude that 1+1=2 because that’s most common, but it could just as easily land on 1+1=3 because that’s a common joke on the internet. LLMs contain deliberate randomization to keep the outputs from being too repetitive. This is the exact opposite of the behavior you want in a calculator or fact-finder. And if you ask it some uncommon question, like 62.7x52.841, you’ll just get nonsense.
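Here is a rough sketch of that contrast. The probabilities below are invented for illustration, and `sample_completion` and `calculator_add` are hypothetical names; a real LLM computes its probabilities with a neural network, but the final "pick a weighted random option" step is the part being illustrated.

```python
import random

# Hypothetical probabilities a model might assign to completions of "1+1=".
# These numbers are invented for illustration.
COMPLETIONS = {"2": 0.90, "3": 0.07, "11": 0.03}


def sample_completion(rng):
    """Pick a completion at random, weighted by its probability."""
    tokens = list(COMPLETIONS)
    weights = list(COMPLETIONS.values())
    return rng.choices(tokens, weights=weights, k=1)[0]


def calculator_add(a, b):
    """Deterministic arithmetic: same inputs, same answer, every time."""
    return a + b


rng = random.Random(0)  # fixed seed so the run is repeatable
samples = [sample_completion(rng) for _ in range(1000)]

print({token: samples.count(token) for token in COMPLETIONS})
print(calculator_add(1, 1))
```

Over many runs the sampler mostly says "2", but some fraction of the time it says "3" or "11" purely by chance. The calculator function has no such dice roll.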

Now sure, some GenAI apps have put some scaffolding around their LLMs to specifically handle math problems. But an LLM itself is still ill-suited for such a task. And when we understand why, we can start to understand why it’s also ill-suited for giving other accurate information.

-

@MisterBoring now I have to add to my +finger I don’t use LLMs to write my poses sometimes I just suck as a writer

thx

-

@Faraday said in AI Megathread:

@Roz said in AI Megathread:

If you program a calculator, you instruct it on immutable facts of how numbers work.

I mean… kinda? A calculator app doesn’t really know math facts the way a third grader does. It doesn’t intuitively know that 1+1=2. It just responds to keypresses, turns them into bits, and shuffles the bits around in a prescribed manner to get an answer.

Yeah, sorry, my point is more that – computers know as much or as little as they’re programmed to know. The calculator is given strict rules to calculate input, whereas LLMs are literally just guessing at a probable answer.