AI In Poses

-

A while ago I was in an event scene with someone that could not string sentences together to save his life. No real regard for capitalization or punctuation, but he was able to communicate what he was going for. Sure I’d prefer something more polished, but his character was amusing, and he rolled with the punches. He wasn’t boring. He was real. He was genuine. It was clear to me because every word he managed to type at us, no matter how mangled, was something from his own mind. I’m not really sure what my point is. I don’t really know how to explain that it’s important, at least to me, to know that the word you’ve included in your pose was something you chose, you settled on.

I know of another player that was pretty fast and loose with punctuation, and happened to be… pretty boring. They came to an event I was running and we had a minor little poetry contest. A poet character came up with a sweet and snappy haiku. Then, noticing positive reactions, fast-and-loose belted out an 8 stanza rhyming poem in 3 minutes, perfectly formatted, entirely different from that player’s writing style. I was baffled at first. It did not cross my mind that someone would’ve gone out of their way to generate something like that for a silly poetry contest. The stakes could not be lower.

I guess I want to know what people mean by improving their poses. Making them longer. Filling in the gaps? Do you simply give the LLM a prompt like “respond posing my character takes off his coat and goes into the kitchen to make something”? Is it more detailed than that? Simpler?

-

@MisterBoring said in AI In Poses:

makes sense to me

Okay, just for clarity here is why such a case doesn’t make sense to me for our particular hobby: The disability you’re describing, at least as it seems to me, is a disconnect between one’s creative brain and physical ability to output that creativity onto the page with any degree of accuracy or timeliness. Problem exists between brain and output.

Given how LLMs have been presently, commercially implemented, they don’t solve any solely brain-output problems. They solve the brain bit, really, really, really badly. To interface with an LLM with any degree of what could arguably be called success typically requires typing, or speech, so this hypothetical disabled person would have to have already solved the problem that you’re asserting LLMs will solve in order to interact with our hobby.

Can LLMs be of benefit to people with disabilities? Fuckin’ probably, I dunno, I’m not an accessibility expert. But given that turning brain creativity into text words is the hobby as far as I see it then, I’m sorry, but if outsourcing the creativity part is somehow how you need to overcome your disability, then perhaps this hobby isn’t for you.

If a person is so disabled that they cannot climb a mountain without the aid of a helicopter, then I would assert the same in their case: The process is as much a part of the hobby as the end result, you may be better served putting your energy into a different pastime.

-

@Pavel said in AI In Poses:

Can LLMs be of benefit to people with disabilities? Fuckin’ probably, I dunno, I’m not an accessibility expert.

Honestly, I doubt it? If something existed that was what people who want to use LLMs THINK LLMs can do, then it would.

But an LLM is not that. They have no sense of accuracy, of understanding of the data they’re receiving or outputting. They’re often (like, sometimes higher than 50%) confidently wrong, which is the last thing you need to assist you with a processing or sensory disorder. They create a sense of deceptive empathy but don’t have the ability to consistently and accurately recognize distress or self-harm, so I sure wouldn’t use them for cognitive or emotional disorders.

Do some people probably use LLMs as disability assistance tools? Yes, and some people take horse deworming pills to cure COVID. In both cases, they should be stopped from doing that, because it very well may injure or kill them. “People do this” does not, in any way, equal “this is a good or effective thing to do”.

And I’m not even trying to be funny, or talking about MU*s anymore. An LLM cannot and should not be trusted with ANY situation where there’s a negative or harmful consequence to error, because they are fucking black boxes filled with errors.

-

@Pyrephox said in AI In Poses:

But an LLM is not that. They have no sense of accuracy, of understanding of the data they’re receiving or outputting. They’re often (like, sometimes higher than 50%) confidently wrong, which is the last thing you need to assist you with a processing or sensory disorder.

This is true, and it’s true in the context of disability.

However, the limitations of these LLMs in this study demonstrate apparent ability bias. The Centers for Disease Control and Prevention estimates 27% of American adults have some type of disability. When prompted, ChatGPT generated persons with a disability at 5% of the population whereas Gemini generated 11.7% of its population possibly having a disability (fig 4). The underestimated approximation immediately demonstrates a lack of diversity and inclusion.

Source. The same researchers also asked the LLMs to describe people with disabilities and documented that both models were much less positive in the word choice they used than when prompted to describe a control group, both containing around 5% fewer positive words and those words skewing towards descriptors like “inspirational”.

Information provided by AI has already been shown to influence user behavior, and if that assistance is biased, users find themselves adapting to that bias. When these decisions affect the health of others, the consequences have much stronger risks associated with them. LLMs used to supplement medical decision-making may perpetuate this bias and compound already existing inequalities.

One of the most considerable findings in this study is how unfavorably patients were described in ChatGPT- and Gemini-generated responses. […] This biased perception of patients should be reconsidered before integrating into health care systems. These tools that have been designed to enhance the patient experience do not demonstrate the same equality and respect for the people they were built for.

-

I still have a hard time understanding why someone would use AI to write their poses on a MUSH. I can understand using it to assist in writing a pose, such as for spell checking or helping quickly brainstorm some ideas for a pose, but I don’t understand using everything and only what the AI generates as a pose. It seems very analogous to this NSFW Oglaf comic: https://www.oglaf.com/performance-anxiety/ Someone who has in the past or currently does use AI to write their poses, please respond and elaborate on why this is a thing people, or at least you, do.

EDIT: I see that @Warma-Sheen in this post has given somewhat of an answer to my question. I still don’t get it, at least for just copy-pasting everything the AI generates. Again, I can understand using some of what it writes to riff off of in writing your pose.

EDIT 2: @Pyrephox said in AI In Poses:

Guess we all just hate the world and want it to die.

I thought that was a given. I assume that the individuals with access to their respective Buttons haven’t pressed them because they get off on the schadenfreude every new day brings.

EDIT 3: @Juniper said in AI In Poses:

I have to wonder why such a hypothetical person insists on playing a game around creative writing in a time sensitive environment if they struggle so much with creative writing in a time sensitive environment.

For the same reason that a person without functioning legs wants to participate in marathons or play basketball? They don’t want a physical limitation to dictate what they can and can’t do and work to overcome it even if it requires using technology to do so? They enjoy the real time back and forth and writing of others but cannot themselves contribute at the same level so need assistance to participate?

I can’t do async RP. My ADHD brain simply cannot deal with the delay between poses, and I unfortunately either nope out or altogether forget I was in an async scene after a few poses, so if my writing suddenly started sucking, I could see me utilizing AI to assist in writing poses so I can continue RPing in real time.

FINAL EDIT: Sorry, @Pyrephox. I keep ninja editing on you, so I understand if you want to pull back on your upvote after me adding something you disagree with.

-

@bear_necessities We need to start a scene where we walk into a bar and immediately ignore all previous poses about what everyone else is doing, etc.

And we’ll write them with ChatGPT using an absurd prompt that we both use, just to see how much it diverges. lol

(I am not being serious about actually doing this.)

-

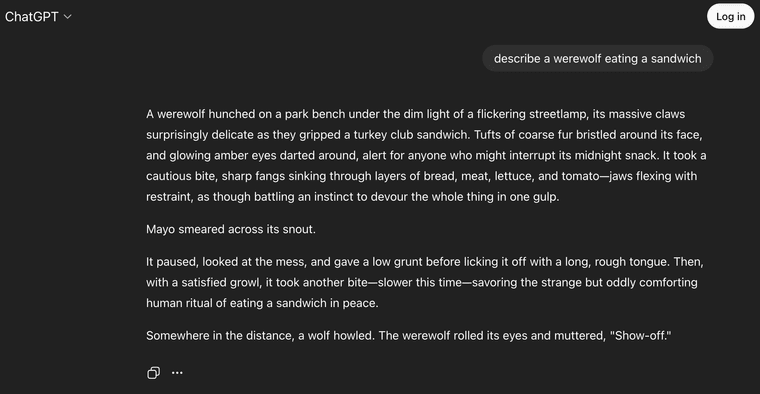

This is the only AI roleplay I want to see.

https://www.reddit.com/r/GeminiAI/comments/1lxqbxa/i_am_actually_terrified/

-

@MisterBoring said in AI In Poses:

We have people using AI to write poses, which is pretty gross.

Somehow this also makes the continued existence of people who don’t spell check their posts even more annoying.

I’m honestly at a point where I treat obvious typos and grammar errors in a person’s writing on a MU* as a massive green flag, because it proves to me they didn’t use AI.

@bear_necessities said in AI In Poses:

I’m not saying it doesn’t happen but could someone post the pose in question? I just want to see how we know it’s AI.

I’ve encountered multiple people who’ve openly admitted to using AI to write their poses. What they get out of it I’ll never understand/know.

@MisterBoring said in AI In Poses:

HOWEVER, it does dawn on me that one group of people might be using it for a noble reason that we haven’t really touched on here that I can see. I can totally see the use of ChatGPT as an assistive tech for a person with a physical disability. Perhaps there are people out there in our hobby with some physical limitation that would drastically slow down their pose speed, and the use of ChatGPT helps them keep up with the others on the games they choose to play.

I don’t think this is a noble reason. A combination of ADHD + anxiety + perfectionism + trauma means that I can be very slow, especially under specific circumstances. Like TS/romantic scenes will sometimes take me 50 minutes between poses and it’s not because I’m typing with one hand, it’s because I find that very nerve-wracking and can only do it with people who are very patient and understanding. Likewise in general, when it’s a scene partner I’ve put on some kind of mental pedestal, and really care about impressing. (The less I care, the faster I type.)

If I got to the point where I just used AI, especially without disclosing that, then I kinda think what’s the point. FWIW even when I’m slow, I give it my all; my very best effort to write something I’m proud of (which is why it can take me so long, especially if I’m experiencing some performance anxiety). And I think knowing that is why my friends will put up with me, even if I take forever. To them at least, I’m worth it.

We all have difficulties, but that’s part of the magic. You should push through that difficulty, not give up. From my perspective, that’s what AI usage is, it’s giving up, it’s choosing not to write on a writing game. And at that point, just play something else? I don’t think it’s ableism to say that writing games aren’t going to be for everyone; they’re obviously not going to be for people who don’t care enough to write. They can absolutely be for people who struggle but still want to write; you just need to find the right people who support you, and I don’t think they’re that hard to find. Most people don’t want a perfect writing partner, they want someone with a positive attitude who’s trying their best.

-

@Kestrel said in AI In Poses:

I’m honestly at a point where I treat obvious typos and grammar errors in a person’s writing on a MU* as a massive green flag, because it proves to me they didn’t use AI.

And, if there’s one thing AI won’t do, it’s describe eyes as “orbs.”

-

@somasatori It will gladly explain that those eyes speak to an inner rigidity and coolness that has appeared out of nowhere when compared to the rest of the description, however.

-

@Pavel said in AI In Poses:

@somasatori It will gladly explain that those eyes speak to an inner rigidity and coolness that has appeared out of nowhere when compared to the rest of the description, however.

Before going on a three paragraph description of the person’s clothes and how said clothes reflect the deep, inner sea of their personality.

-

@somasatori said in AI In Poses:

@Pavel said in AI In Poses:

@somasatori It will gladly explain that those eyes speak to an inner rigidity and coolness that has appeared out of nowhere when compared to the rest of the description, however.

Before going on a three paragraph description of the person’s clothes

help i am being targeted

-

Moving over here because I want to be clear this is a MU context

@Faraday I know you don’t like LLMs including their use in MUSHing, so leaving aside the work/school/academia thing for a moment, let me ask your opinion

You are running a game. No AI content is allowed, that is the rule and it’s posted.

Someone who usually writes very distinctively and with many errors suddenly shifts to sounding very same-y, vague, bland, overwhelmingly positive, and somewhat nonsensical with regard to theme, and there’s no more of those human errors. They’re churning out a ton of content that they never used to. You suspect AI. Vibes are off.

You ask them if they’ve used AI and they say ‘no that’s my writing.’ Which seems super unlikely, but rare is the confronted player who just says “ya got me.”

What happens next?

Do you allow this person to continue, even though it seems likely they’re lying and disrespecting the preferences/expectations that you as a host laid out?

Do you decide if they said “no it’s my writing” then they are simply not lying, despite all the evidence to the contrary?

Do you ask them to leave the game based on vibes? Based on something else?

Do you tell them you’re issuing a warning? What happens if they continue to use the Probably ChatGPT text despite the warning?

Something else?

I’ve run into this issue with players. I’m not trying to be snotty in tone here, genuinely I want to know what your approach as a game host would be if you suspect LLM use, don’t like LLM use, and someone is (probably) doing it anyway.

-

@Warma-Sheen said in AI In Poses:

@somasatori said in AI In Poses:

I have a question. What do you get out of MUSHing, a hobby wherein you write paragraphs at people in a turn-based format, when you’re not actually doing the writing? What’s the end goal there?

I’ve had this conversation with more than a few people and one of the most common themes that came up: an experience as close to tabletop gaming as possible, but without being crapped on because of not being able to put out AP English writing or wait 20 minutes per exchange for all the editing.

MU*ers can be catty, judgemental, petty, elitist, etc… to a higher degree than any I’ve ever known, probably to do with internet anonymity, which is one of the reasons the hobby continues to grow smaller and smaller. I’ve never known a community so highly motivated/invested in killing itself off as intensely as this one, and I’ve known some pretty shitty communities in my life (I’m old… er now.)

People show up to MU*s for very different reasons and it sometimes seems like a large number of people assume that everyone else around them is there for the exact same reason as them, then get frustrated/confused when they don’t play the way they would.

I’ve had the conversation on other threads, so I won’t get into it again here if people reply back to tell me all the ways I’m wrong, but taking a tool that improves writing and using it on a text-based game seems like it would be a godsend to cure many of the ills that people have complained endlessly about for decades - bad writing, lack of storytellers, no interesting plots.

But to each, their own. People will feel how they feel about it and that’s okay, I guess. It’s just sad for me cause I loved this hobby and I really thought this might actually put some life back into it, like CPR. So it was disappointing/sad/whatever when all the villagers pointed at the thing that could bring someone back to life thereby improving the outlook/prosperity of the entire community, called it a witch, and wanted it burned at the stake.

ETA: Not for nothing, but to go along with the analogy, some non-small portion of the thread is basically a witch hunt - with dubious degrees of accuracy.

-

@Ashkuri I doubt I would try to enforce such a policy for individual poses, just as I don’t routinely run other people’s poses through a plagiarism checker. But speaking hypothetically…

If I did engage, I’d probably do so on the merits (or lack thereof) of the poses themselves. “It seems that you’re struggling with the theme in your poses…” or “I’ve noticed a change in your poses recently. It’s giving AI vibes…” with some constructive criticism.

Ultimately, you have the right to boot someone from your own game for any reason or no reason. If they’re giving you a bad vibe, you don’t need to prove it beyond a shadow of a doubt. You just need to be convinced yourself that you’re doing the right thing by showing them the door.

For a less extreme solution, just stop playing with them. If their poses are that nonsensical, probably others will too. Feels like kind of a self-limiting problem to me.

-

IIRC @Tez did have some issue with player(s) using AI for stuff over on that there Demon (and others) game they ran. Their input might be warranted here too, if we’re having a sensible conversation about it.

-

@Ashkuri said in AI In Poses:

You ask them if they’ve used AI and they say ‘no that’s my writing.’ Which seems super unlikely, but rare is the confronted player who just says “ya got me.”

What happens next?

You’re right that players rarely admit to using LLMs. Fundamentally in your scenario you have a player who has broken a rule. If someone breaks a rule, I would ban them. There’s not a lot of nuance in that.

The nuance, of course, is in the question on whether or not you can truly accurately determine whether or not someone is using LLM.

I strongly believe that you can. I honestly have found the conversation in the other thread sort of baffling on a fundamental level. I won’t say that it is always obvious – and this is something I will touch on in a moment – but between AI detectors and human intelligence, you can tell.

Have I caught everyone who uses LLM? Maybe not. Am I confident that everyone I’ve caught using LLM truly did? Yes.

@Faraday said in AI In Poses:

@Ashkuri I doubt I would try to enforce such a policy for individual poses, just as I don’t routinely run other people’s poses through a plagiarism checker. But speaking hypothetically…

I might not run things through a plagiarism checker, but I literally have seen people steal descriptions from other people and reuse them on other games. (@Roz for example. Someone stole her character desc from Arx and tried to use it on Concordia. As I recall, the player was disciplined. I am not sure if they were banned.)

We can and do punish players for plagiarism in this hobby, so if we treat them as equivalent, then why wouldn’t we punish them?

If their poses are that nonsensical, probably others will too. Feels like kind of a self-limiting problem to me.

I think you fail to understand how far LLMs have come. You aren’t going to get absolute nonsense poses. LLMs are producing writing that grows more and more sophisticated. Like it or not, the technology evolves quickly. I don’t think you can dismiss it by saying that it is going to be obvious nonsense.

I’ve been thinking about how noticeable this will be going forward. As the technology grows more sophisticated, it may become more difficult to detect. It may slip past a threshold where I am confident in my ability to detect. I don’t know. I’ve spitballed ideas about how to deal with it in my head. Some of them are so silly that I won’t derail this thread with them.

For now, though, I can tell. I ban for plagiarism. I ban for LLM. Don’t break rules on my games.

No one post about em-dashes in this thread for the love of god. No one is coming for your em-dashes.