AI Megathread

-

@Faraday

There was cheating before AI and there were false accusations of cheating before AI detectors. Being falsely accused of using AI is no more serious than being accused of plagiarism. What is the alternative?

-

@Trashcan I think you’re underestimating the psychological effect that takes place when people trust in tools. There’s a big difference between “I think this student may have cheated” and “This tool is telling me this student cheated” when laypeople don’t understand the limitations of the tool.

I’ve studied human factors design, and there’s something that happens with people’s mindsets once a computer gets involved. We see this all the time - whether it’s reliance on facial recognition in criminal applications, self-driving cars, automated medical algorithms, etc.

Also, plagiarism detectors are less impactful because they can point to a source and the teacher can do a human review to determine whether they think it’s too closely copied. That doesn’t work for AI detection. It’s all based on vibes, which can disproportionately impact minority populations (like neurodivergent and ESL students). I also highly doubt that hundreds of thousands of students are falsely accused of plagiarism each year, but I can’t prove it.

As for the alternative? I don’t think there is one single silver bullet. IMHO we need structural change.

-

Just to summarize, and please correct me, Trashcan thinks that SOME amount of false positives (1%) using tools is acceptable in the fight against AI and Faraday thinks that ZERO amount of false positives using tools is acceptable in the fight against AI? Am I understanding that you think it’s better to trust your gut here, Faraday?

-

@Faraday said in AI Megathread:

IMHO we need structural change.

Agreed. It’s fundamentally not even really an “AI” problem at its core, but a sort of “humans relying on authorities instead of thinking” problem.

-

@Yam That isn’t exactly what I said. It’s a complex issue requiring multiple lines of defense, better education, and structural change. But I am saying that even 99% accuracy is too low.

For example, say you have a self-driving car. Are you OK if it gets into an accident 1 out of every 100 times you drive it?

Say you have a facial recognition program that law enforcement leans heavily on. Are you OK if it mis-identifies 1 out of every 100 suspects?

I’m not.

1% failure doesn’t sound like much until you multiply it across millions of cases.
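To put rough numbers on that, here is a minimal sketch. The submission volumes are made up purely for illustration, and it assumes every submission is honestly written; only the 1% false positive rate comes from this discussion.

```python
def expected_false_accusations(honest_submissions: int, false_positive_rate: float) -> float:
    """Expected number of honest submissions wrongly flagged as AI-written."""
    return honest_submissions * false_positive_rate


# Hypothetical volumes, chosen only to show how the count scales.
for volume in (100, 10_000, 1_000_000, 10_000_000):
    flagged = expected_false_accusations(volume, 0.01)
    print(f"{volume:>10,} honest submissions -> ~{flagged:,.0f} false accusations")
```

The rate stays the same at every scale; what changes is the number of real people on the wrong end of it.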

-

@Yam

I think that some amount of mistakes in any system is acceptable. Nothing is flawless. To me the barrier that a system needs to clear is “better than any alternative”. In AI detectors, we’ve already seen that most of the time, people unassisted get it right only 50-60% of the time. Certain detectors are performing at a level where less than 1% of results are false positives. That seems better.

@Faraday said in AI Megathread:

say you have a self-driving car. Are you OK if it gets into an accident 1 out of every 100 times you drive it?

There were about 6 million auto accidents in 2022. If the self-driving car (extrapolated to the whole population) would have caused 5 million accidents, it would be better.

@Faraday said in AI Megathread:

Say you have a facial recognition program that law enforcement leans heavily on. Are you OK if it mis-identifies 1 out of every 100 suspects?

If this facial recognition program does a better job than humans, yes I am okay with it. Humans are notoriously poor eye witnesses.

Eyewitness misidentification has been a leading cause of wrongful convictions across the United States. It has played a role in 70% of the more than 375 wrongful convictions overturned by DNA evidence. In Indiana, 36% of wrongful convictions have involved mistaken eyewitness identification.

@Pavel said in AI Megathread:

but a sort of “humans relying on authorities instead of thinking” problem

There are cases when humans should rely on authorities instead of thinking. No one is advocating for completely disconnecting your brain while making any judgment, but authoritative sources can and should play a key role in decision-making.

-

@Trashcan said in AI Megathread:

There were about 6 million auto accidents in 2022. If the self-driving car (extrapolated to the whole population) would have caused 5 million accidents, it would be better.

Lol man, I have to agree. I realize that we’re generally anti-generative AI in art/writing here but I’ll be honest, if the computer drives the car better than my anxious ass, I’ll ride along.

-

@Trashcan said in AI Megathread:

There were about 6 million auto accidents in 2022. If the self-driving car (extrapolated to the whole population) would have caused 5 million accidents, it would be better.

Making cities walkable would be far better than throwing more money into the abyss that cities become when they’re overrun by self-driving cars.

-

@Yam said in AI Megathread:

if the computer drives the car better than my anxious ass, I’ll ride along.

That’s a big “if” though, and is the crux of my argument.

@Trashcan said in AI Megathread:

If this facial recognition program does a better job than humans, yes I am okay with it. Humans are notoriously poor eye witnesses.

The difference is that many people know that humans are notoriously poor eye witnesses. Many people trust machines more than they trust other humans, even when said machines are actually worse than the humans they’re replacing. That’s the psychological effect I’m referring to.

-

@Jumpscare said in AI Megathread:

@Trashcan said in AI Megathread:

There were about 6 million auto accidents in 2022. If the self-driving car (extrapolated to the whole population) would have caused 5 million accidents, it would be better.

Making cities walkable would be far better than throwing more money into the abyss that cities become when they’re overrun by self-driving cars.

Unfortunately, tech bros would rather reinvent bandaid solutions over and over again instead of actually working to improve the future.

-

@Jumpscare Walkable cities is a whole 'nother can of worms.

-

@Jumpscare Totally, but don’t let the perfect be the enemy of good.

-

@Trashcan It is part of ‘Turnitin’ which is pretty widely used. I have no idea if it’s one of the ones you’ve listed here, or which one if it is.

Part of what’s exasperating about it is that it doesn’t give me any clue as to why it is tagging segments as “likely AI generated” so even if I don’t spot some way that makes it seem likely that it’s wrong, what possible use is it?

It would be ironic to the point of grotesque in the context of a class where I spend the whole time saying, “Why do you believe that?” and “Prove it,” and “Where’s the evidence?” and “Does that research methodology work? Do you think the results mean what the researchers say they mean? Did the newspaper report say it means what the researchers said it means?” and so on. After that I’m gonna roll up and say, “Hey, a computer program using semi-secret methodology to detect AI says you cheated, so did you?” to a student?

I get @Faraday’s comments about people trusting computers in a weird way, but I guess I don’t share that, because I feel like I may as well draw tarot cards and just say anybody who gets an inverted swords card cheated.

-

@Gashlycrumb how did you know about the method I used to grade papers when I was a TA?

-

@Trashcan said in AI Megathread:

No one is advocating for completely disconnecting your brain while making any judgment

I know that. You know that. But people are idiots and will entirely defer to an authority. Education is always ten years behind technology, and laws are fifteen years behind that.

-

While that ChatGPT Wikipedia guide is sort of okay, here are some really obvious tells that I’ve noticed.

“It’s not x. It’s y.”

“It’s x. And that matters.”

ChatGPT also likes the words “weight” and “pressure” a lot.

Here’s a style example:

It writes like this. Short sentences. And then starting a sentence with a conjunction. That’s why each paragraph has weight, and that matters.

-

@InkGolem said in AI Megathread:

“It’s not x. It’s y.”

The reason that this—or anything else GenAI does—comes up frequently is because the algorithms are recognizing patterns in actual writing. It doesn’t make this stuff up out of thin air.

Now yes, sometimes it uses those constructs in the wrong place / wrong way and that can be a tell. Or you can find it in weird places, like an email from your friend. But the construct itself isn’t a tell of AI use. The AI is just copying what it sees.

For example, from a few book sources in the 1900s:

It’s not about dieting. It’s about freedom from the diet.

It’s not a newspaper. It’s a public responsibility.

It’s not being moved. It’s simply joy.

It’s not love. It’s something higher than love.

It took about 60 seconds to find these and a zillion other instances on a Google Ngram search of published literature.

-

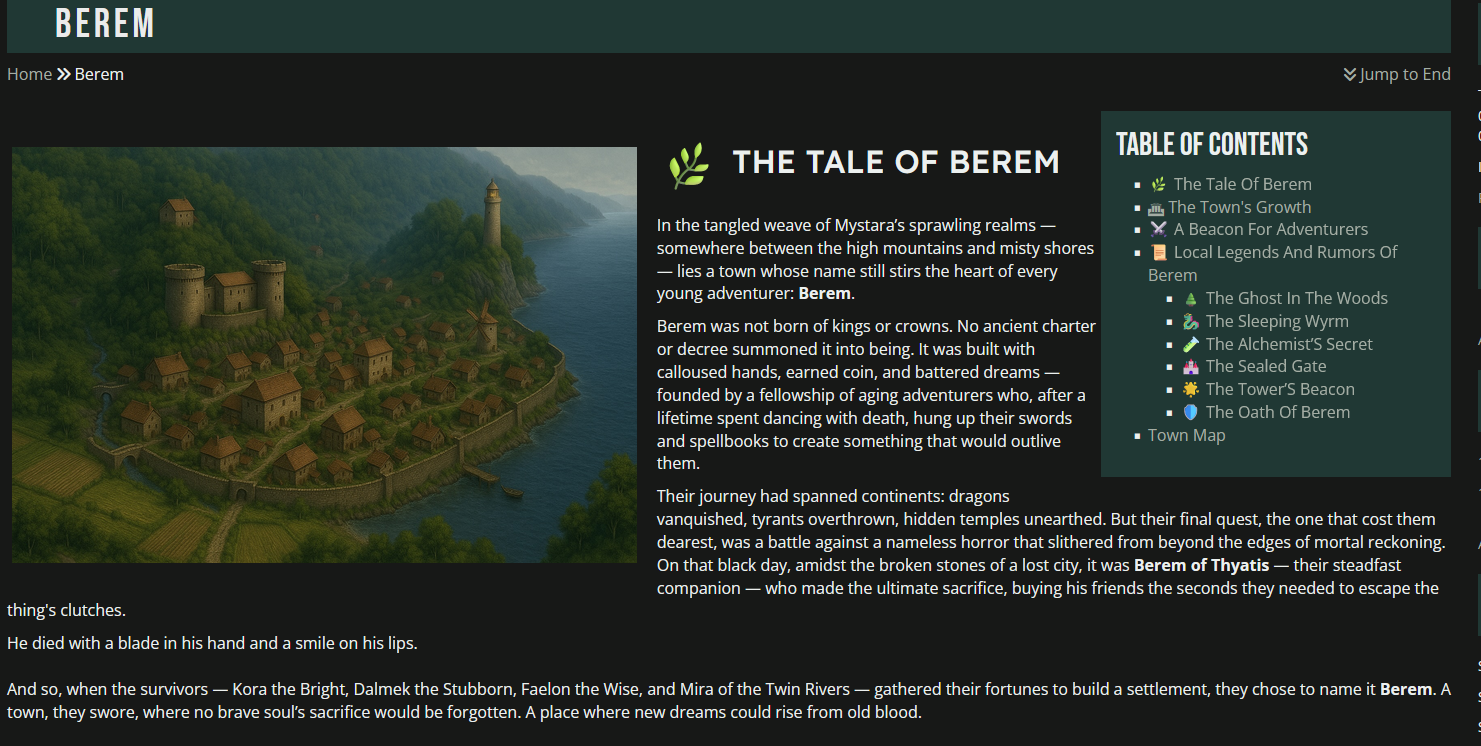

I’m not trying to start anything, but a lot of this description is kind of why I shied away from Berem (aside from losing all time to do anything outside of work, family, sleep).

No shade for anyone using AI in code; to be clear, I don’t know if that’s the case here. Also, I’m not even sure if they actually used AI in the setting writing, but it just hits 100% of all of the hallmarks described above, including the use of emojis.

And the text itself:

The Town’s Growth

Berem was carved from wild woods and stubborn fields. The founders built it where river met forest, shielded by cliffs and watched over by a shimmering lake. They poured their remaining magic and wealth into it: a stout Town Hall to govern, a Marketplace to trade, an Inn for wanderers, and a Temple so Berem’s spirit would be forever honored.

Word soon spread across Mystara’s Known World — not of a bustling metropolis, but of a place where young heroes could find their first footing. Here, a novice could earn coin protecting caravans, clearing nearby ruins, or rooting out trouble in the thick woods. Berem’s Tavern became famous for its worn quest board, where calloused hands posted cries for help — and new legends were born.

The town’s location remains a curiosity. Some maps mark Berem near Darokin, others whisper it lies close to the edges of the Five Shires, or in the misty borderlands beyond Karameikos. Berem itself cares little for such speculations. It belongs to the world, and to those bold enough to find it.

A Beacon for Adventurers

Berem today remains what it has always been:

- A starting point for wanderers, outcasts, and dreamers.

- A resting place for those who need healing or hope.

- A memorial to courage — not grand, but real.

Its harbor welcomes merchant ships. Its smithy forges the blades that will one day sing in distant halls. Its temple bells toll for the fallen and the triumphant alike. And every so often, beneath the light of the high tower’s beacon, a new band of adventurers sets forth — hearts full of hope, blades newly sharpened — carrying the spirit of Berem into the wide, wild world.

They say if you stand quietly at the town’s entrance when the mist rolls in, you might just hear Berem’s laughter on the breeze — urging you onward.

I’m not sure why I had such a visceral reaction to it. Generally speaking I don’t care if people use AI in code, and I will usually have a kind of ‘meh’ reaction to seeing AI art (especially the piss-filter images on the NPC pages on the Berem site), but the writing just took me entirely out of it.

If I had to guess, it’s because I can see my own motivations for using AI code or something to help facilitate a location where I can tell a specific story. If you use AI for everything altogether, then I wonder what the point of having a writing-based game is, or why you would create a unique location in a world at all. Berem is not, to my knowledge, part of the Mystara canon, so if you have an idea for a cool little adventurer town, why not write it up yourself? Idk. This also is likely not completely on topic, since most of the discussion is on AI in the real world rather than in our corner of it.

-

@somasatori Yeah. It looked like AI to me and it’s not the only game out there that looked like it had AI content to me. I don’t personally care as much about code, probably bc I’m not a coder, but the content is the heart and soul of it for me, and it sucks to see.

-

Yeah, that absolutely reads as LLM slop to me. BOooo