@Yam

I prefer web-based by a mile. For most users, it is much easier to navigate theme files and fill out a form on a web page than to run individual commands, each of which pushes earlier output further up the buffer.

Posts

-

RE: Web-based CharGen or in-game CharGen (posted in Game Gab)

-

RE: AI In Poses (posted in Rough and Rowdy)

@Faraday

This white paper, published by Originality.ai and concluding that Originality.ai is the best checker, includes six studies in the chart your screenshots come from. Two of those are from 2023, and the newest is from June 2024. Since the article is dated November 2025, I have to question why nothing newer is included.

If there is any takeaway from what I’ve argued in these threads, it is that specifics matter and none of this is static. That is why opposition to GenAI cannot be based on the quality of the experience; it must be based on the nature of the experience. “I don’t want to RP with GenAI because it’s not very good” is not going to age well as a position.

-

RE: AI In Poses (posted in Rough and Rowdy)

@Trashcan

I had to wait for my free Pangram checks to tick over, but out of curiosity I put the AI generated text above into GPTZero and Pangram.

And then I checked @Tez’s original desc.

Not a huge sample size, of course, but I thought it was interesting.

-

RE: AI In Poses (posted in Rough and Rowdy)

@Hobbie said in AI In Poses:

With the amount of time investment needed to get AIs these days to do absolutely anything right (see the other thread where I said AI is getting dumber), by the time you carefully cultivate an LLM to write a pose that actually makes sense within the context of the scene, before even making it fit your writing style, you might as well have just written it out yourself.

We have to let this one go. It just is not representative of the experience today. I do not use these tools, but Copilot is installed on every Windows device now and it is trivially easy to try this yourself.

I gave it a desc @Tez wrote and asked it to make a new desc in the same style for a woman.

It used too many exact phrases from the original so I simply asked it to try again.

I gave it some basic instructions about how to pose and intro’d ‘my guy’.

I realized I needed to tell it not to write like a writer (we don’t powerpose), and then I was in business.

From here on, all I would have to do is paste in a scene partner’s poses to keep ‘Evelyn’ rattling away perpetually, with responses coming back to me in 5 seconds.

Again, this took less than 10 minutes. We need to be aware of where these tools actually are.

-

RE: AI In Poses (posted in Rough and Rowdy)

@Faraday

This is a question about the individual service, not the entire category. For instance, Pangram’s policy:

Pangram does not train generalized AI models like ChatGPT, and our AI detection technology is based off of a large, proprietary dataset that doesn’t include user submitted content.

We train an initial model on a small but diverse dataset of approximately 1 million documents comprised of public and licensed human-written text. The dataset also includes AI-generated text produced by GPT-4 and other frontier language models. The result of training is a neural network capable of reliably predicting whether text was authored by human or AI.

If you refuse to use any technology that relies on machine learning, algorithms, or neural networks, regardless of the specifics, then obviously that is your prerogative, but you are going to have a hard time using the internet at all.

-

RE: AI Megathread (posted in No Escape from Reality)

@Yam

I think some number of mistakes in any system is acceptable. Nothing is flawless. To me, the bar a system needs to clear is “better than any alternative”.

With AI detectors, we’ve already seen that in most studies, unassisted people get it right only 50-60% of the time. Certain detectors are performing at a level where fewer than 1% of results are false positives. That seems better.

@Faraday said in AI Megathread:

say you have a self-driving car. Are you OK if it gets into an accident 1 out of every 100 times you drive it?

There were about 6 million auto accidents in 2022. If the self-driving car (extrapolated to the whole population) would have caused 5 million accidents, it would be better.

@Faraday said in AI Megathread:

Say you have a facial recognition program that law enforcement leans heavily on. Are you OK if it mis-identifies 1 out of every 100 suspects?

If this facial recognition program does a better job than humans, then yes, I am okay with it. Humans are notoriously poor eyewitnesses.

Eyewitness misidentification has been a leading cause of wrongful convictions across the United States. It has played a role in 70% of the more than 375 wrongful convictions overturned by DNA evidence. In Indiana, 36% of wrongful convictions have involved mistaken eyewitness identification.

@Pavel said in AI Megathread:

but a sort of “humans relying on authorities instead of thinking” problem

There are cases when humans should rely on authorities instead of thinking. No one is advocating for completely disconnecting your brain while making any judgment, but authoritative sources can and should play a key role in decision-making.

-

RE: AI Megathread (posted in No Escape from Reality)

@Faraday

There was cheating before AI, and there were false accusations of cheating before AI detectors. Being falsely accused of using AI is no more serious than being accused of plagiarism.

What is the alternative?

-

RE: AI Megathread (posted in No Escape from Reality)

@Gashlycrumb

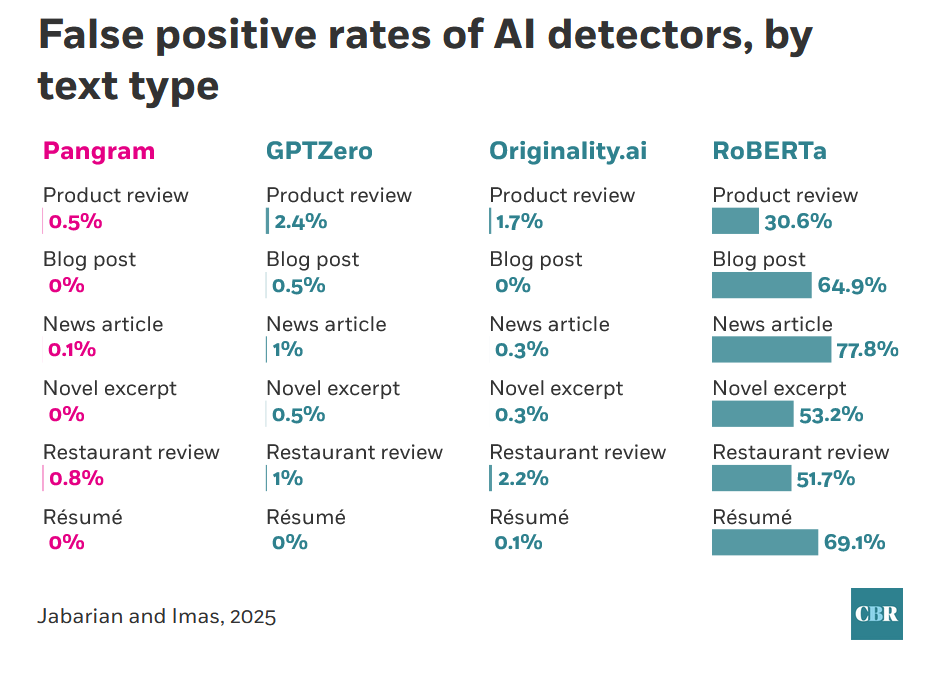

That does sound frustrating, and while I’ve defended the low odds of AI detectors falsely accusing people throughout this thread, it’s still worth noting that even recent studies find wide disparities between product offerings. From one of the studies already linked:

Clearly RoBERTa, the open-source offering, is not something anyone should be using. I hope there’s some way to give the administration feedback that the particular tool they’ve selected is poorly suited to the task.

-

RE: AI Megathread (posted in No Escape from Reality)

@Faraday said in AI Megathread:

For example, from the Univ of San Diego Legal Research Center:

If we’re getting down to the level of sample size and methodology, it’s probably worth mentioning that this study looked at 88 essays, and ‘recent’ in this context was May 2023, about 6 months after the release of ChatGPT. It is safe to assume the technology has progressed.

-

RE: AI Megathread (posted in No Escape from Reality)

@Pavel said in AI Megathread:

are very small in scale

By using the method described above, we create 6 datasets with around 20K samples each

The researchers built a dataset of about 2,000 human-written passages spanning six mediums: blogs, consumer reviews, news articles, novels, restaurant reviews, and résumés. They then used four popular large language models to generate AI versions of the content by using prompts designed to elicit similar text to the originals.

What would you consider an acceptable scale?

@Faraday said in AI Megathread:

You cited a study with a microscopic sample size and flawed methodology,

Fair enough; I can’t find any similar studies with a larger sample size. Most other studies find accuracy statistically significantly better than a coin flip, ranging from just barely above 50% to the upper 60s.

@Pavel said in AI Megathread:

The odds of someone familiar with AI output putting it through two different commercial AI detectors in the real world are almost laughably small

Even if we grant that only two events must occur, the suspicion (we’ll call it 50/50) and a single check (using an average false-positive rate of 1% across the commercial offerings), the combined odds come to 0.5%. If you’re approaching your 50s, you’re still more likely to die in a given year than to have this happen to you. The makers of these tools are aware of the negative ramifications of a false positive, and the tools are biased toward not returning them.
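The 0.5% figure is just the product of the two probabilities, which only holds if the suspicion and the detector check are treated as independent events (an assumption; nothing guarantees it). A minimal sketch using the 50% and 1% figures from this post, with a hypothetical helper name:

```python
from math import prod


def joint_false_flag_odds(*probabilities: float) -> float:
    """Hypothetical helper: multiply per-step probabilities.

    Assumes every step is independent of the others.
    """
    return prod(probabilities)


# 50/50 suspicion, then one check with an assumed average 1% false-positive rate
odds = joint_false_flag_odds(0.5, 0.01)
print(f"{odds:.1%}")  # 0.5%
```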

-

RE: AI Megathread (posted in No Escape from Reality)

@Pavel said in AI Megathread:

The only way you can truly tell if writing is LLM generated and not simply a style you’ve come to associate with LLM is to be comparative.

This is not true; people who are very familiar with AI-generated text can identify it accurately 90% of the time without any access to ‘comparative’ sources.

@Aria said in AI Megathread:

Anything I write professionally would almost certainly be pegged as written by AI,

@Pavel said in AI Megathread:

various institutions are using flawed heuristics – be they AI-driven or meatbrain – to judge whether something is written by an LLM

The fear that human-written content is going to be flagged as AI-generated is mostly overblown. People who are not familiar with AI are not good at detecting it, but when you see stats saying AI detection tools are “highly inaccurate”, that statistic is almost always about AI text not being flagged (evasion), not about false positives. Various studies have found commercial AI detectors to have very low false-positive rates: GPTZero identified human content correctly 99.7% of the time, Pangram identified it correctly over 99% of the time, and Originality.ai did slightly less well at 98+%.

If we take these numbers at face value, the odds of someone familiar with AI output identifying a piece of writing as suspect, putting it through two different commercial AI detectors, and both of them flagging it as AI when it was, in fact, human-written are in the neighborhood of 0.002%. You’re more likely to die in a given year than to have this happen to you. I’m personally comfortable with that level of risk.
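The post doesn’t say which inputs produce 0.002%. One reading that lands on that figure is sketched below. It assumes a 10% chance that a familiar reader wrongly suspects human text (the flip side of the 90% accuracy figure), Pangram’s 99% and Originality.ai’s 98% human-text accuracy, and independence between all three steps. These inputs are assumptions for illustration, not numbers taken from any one study:

```python
from math import prod

# Assumed inputs: a familiar reader's chance of wrongly suspecting human text,
# plus the human-text accuracy figures quoted above for two detectors.
reader_false_suspicion = 1 - 0.90  # flip side of the ~90% accuracy figure
detector_accuracy = {"Pangram": 0.99, "Originality.ai": 0.98}

# A detector's false-positive rate is the share of human text it misjudges.
false_positive_rates = [1 - acc for acc in detector_accuracy.values()]

# Treating every step as independent, the joint odds are a simple product.
joint = prod([reader_false_suspicion, *false_positive_rates])
print(f"{joint:.3%}")  # 0.002%
```

Swapping in GPTZero’s 99.7% for either detector would shrink the result further. If the detectors’ errors are correlated (for example, both tripping on the same formal style), the real odds would be higher than this product.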

The odds of someone unfamiliar with AI output accusing you off the cuff of AI use and being wrong about it are about 50%. So. You know. Watch out for that one.

-

RE: Banning Bad, Actually? (posted in Game Gab)

Anyone who is rude/a chore/a hassle/a drain/mean/a liar when dealing with staff (and let us leave room for grace and assume this is more than an isolated incident) is doing the same shit to everyone else.

If I’m on staff and it’s persistently unpleasant for me to deal with you, I’m not going to inflict you on anyone else either. “Then your game will just become the type of people you personally don’t find unpleasant.” I know, sounds pretty cool to me.

-

RE: Banning Bad, Actually? (posted in Game Gab)

I recently played on a game where banning/asking people to leave was applied in what I would describe as a fairly liberal manner, and for many years I played on games where it was, in fact, almost impossible to get banned. The experience was dramatically more positive on the game that had relatively zero tolerance for bad behavior. It was a smaller game than the others, but, and I cannot overstate this, that was a small price to pay.

Everyone has a bad day sometimes. Sometimes people are bad at regulating how that affects the people they interact with. It does happen. But as the philosopher G.W. Bush once said: fool me once, shame on you. Fool me... you can’t get fooled again.

-

RE: Banning Bad, Actually? (posted in Game Gab)

@Faraday said in Empire Discussion Thread:

I know this is gonna’ be hard to believe for some folk, but you can actually have a game of relatively decent people that, even on their off days, won’t be particularly rude or pushy directly to the game runners. It might not be a BIG game, but from what I gather, it doesn’t look like most staffers want to staff big games anyway.

That’d be nice, but I have never in my life been on such a game.

I don’t know, man. On the last game I was on, somebody was rude to staff shortly after the game opened and got banned for it right away. That game went on to have over 4000 scenes, so it seemed to work out, and everyone was pretty chill in their dealings with staff after that, at least to my knowledge.

-

RE: Banning Bad, Actually? (posted in Game Gab)

@Pacha said in Empire Discussion Thread:

even great players can sometimes have a day where they have a shitty attitude.

A great player probably knows enough to be on their best behavior while I’m getting to know that they’re a great player.

-

RE: Star Wars Age of Alliances: Hadrix and Cujo (posted in Rough and Rowdy)

I’m starting to feel like the staff on this game are not to be trusted, you guys

-

RE: Historical Games Round 75 (posted in Game Gab)

Since I was namedropped earlier in the thread (thanks @Tez) I felt compelled to post something here.

I think representation is important, and I wouldn’t be inclined to run a historical game that glossed over the historical facts of what it was like to be a member of a group subject to “isms” at that time. I also wouldn’t be inclined to run one that didn’t.

When you put an “ism” into the social contract as allowed content, you immediately create the expectation that it will be a gameplay factor, and you open up the conversation about what is “accurate and allowed” versus what is “accurate but not allowed”. By opening up those things, you have taken on the responsibility for providing that content and policing it. This leads to a lot of questions.

Why is this dynamic being included? Do you expect it to enhance the story in some way? Do you have plans to engage with it directly, or will it just be existing in the background? If you have no plans to engage with it directly, what is the benefit of including it? Do you expect people to RP about it? What will that depiction look like? Does that depiction serve to illuminate something about the human experience in a respectful representation or does it serve to create spectacle and story drama purely for entertainment? What will you do about players who are leaning too far into the latter? Can you clearly define where that line is? What will you do if YOU are the one who crossed the line and it’s been brought to your attention?

Regardless, the expectation should be that a game clearly state on the tin exactly what unconscionable things might occur to your character in the course of play. Do not leave players to discover this through play. If my guy could be killed, say they could be killed. If they could be sexually assaulted, say they could be sexually assaulted. If they could be discriminated against, say they could be discriminated against and how it could look. Gritty Games are allowed. Please put the Narrative Facts on the side. I can choose if this is right for my diet.

-

RE: Just because it's your fave don't mean it ain't literature (posted in No Escape from Reality)

I don’t know if it was intentional, but Materialists is Sweet Home Alabama.